Since the outbreak of COVID-19, the metaverse has gained attention as a tool for remote interaction. With recent advances in 3D sensing technology, a new kind of remote communication system is emerging that continuously scans the real world in 3D and shares it in virtual spaces. We call this system the "Hybrid Metaverse" and are conducting research aimed at its practical application.

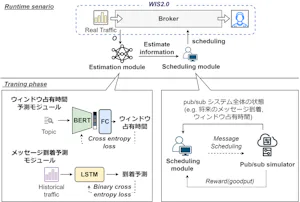

The most critical challenge in realizing the hybrid metaverse is reducing the data volume of 3D video communication while maintaining video quality. This requires solving an optimization problem that maximizes video quality subject to a limit on data volume. Formulating this problem requires a model of video quality. Because perceived video quality depends on subjective human perception, it is difficult to capture with simple formulas, so we model it with neural networks.
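As a rough illustration, the problem described above can be written as a constrained maximization. The notation here is assumed for this sketch and does not come from our papers: $r_i$ is the data rate assigned to the $i$-th shared 3D object or stream, $C$ is the available data capacity, and $Q_\theta$ is the neural-network quality model with learned parameters $\theta$.

$$
\begin{aligned}
\max_{r_1, \dots, r_N} \quad & \sum_{i=1}^{N} Q_\theta(r_i) \\
\text{subject to} \quad & \sum_{i=1}^{N} r_i \le C, \qquad r_i \ge 0 \quad (i = 1, \dots, N)
\end{aligned}
$$

The actual formulation may weight objects differently or use another aggregate of per-object quality. The sum is only one plausible choice.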

Using this neural-network quality model, we are designing an algorithm that optimizes 3D video quality for multiple users under limited network resources, and we are implementing it and evaluating its performance. Going forward, we plan to implement the entire hybrid metaverse system with this optimization method incorporated.
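To make the idea of sharing limited network resources among multiple users concrete, here is a minimal sketch in Python. It is not the algorithm we are developing: it is a simple centralized greedy allocation, and `placeholder_quality` is a hypothetical logarithmic stand-in for the neural-network quality model. All names, units, and the step size are assumptions made for illustration.

```python
import math
from typing import Callable, List


def placeholder_quality(rate: float) -> float:
    """Hypothetical stand-in for a learned quality model.

    Assumes quality grows with data rate but with diminishing returns.
    """
    return math.log1p(rate)


def allocate_rates(
    qualities: List[Callable[[float], float]],
    capacity: float,
    step: float = 1.0,
) -> List[float]:
    """Greedily split `capacity` among users in increments of `step`.

    Each increment goes to the user whose quality would improve the most.
    `qualities[i]` maps user i's data rate to a predicted quality score.
    """
    rates = [0.0] * len(qualities)
    remaining = capacity
    while remaining >= step:
        # Marginal quality gain of giving one more increment to each user.
        gains = [q(r + step) - q(r) for q, r in zip(qualities, rates)]
        best = max(range(len(rates)), key=gains.__getitem__)
        if gains[best] <= 0:
            break  # no user benefits from more data
        rates[best] += step
        remaining -= step
    return rates


if __name__ == "__main__":
    users = [placeholder_quality] * 3
    print(allocate_rates(users, capacity=6.0))
```

With identical quality models the budget ends up split evenly. If one user's model reports larger gains, for example because that user is closer to the shared object, the greedy rule shifts data toward that user.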

Published Papers

- 丸山結, 天野辰哉, & 山口弘純. (2024). 実空間融合型メタバースにおける共有オブジェクト品質の分散型最適化手法 [A distributed optimization method for shared object quality in a real-space-integrated metaverse]. 研究報告コンピュータセキュリティ (CSEC), 2024(37), 1-8.