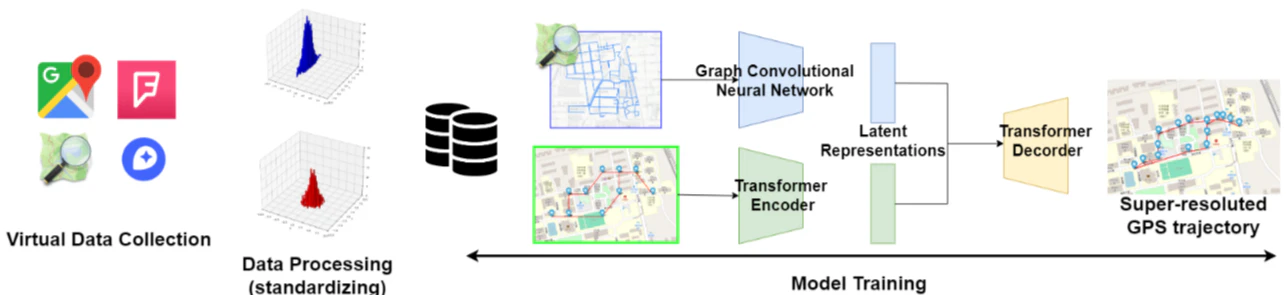

In recent years, mobility data representing the flow of cars and people in urban environments has been expected to serve many purposes, such as investigating the causes of traffic congestion, guiding people during events or disasters, deciding the locations of commercial facilities, and preparing evacuation orders for disaster resilience. Mobility data for vehicles can currently be obtained from GPS traces, which record the positions at given times of a large number of cooperating vehicles, and these positions are used to detect and estimate traffic congestion on an hourly or daily basis.

However, the granularity of such mobility data is not always fine, and it is difficult to grasp detailed mobility, such as vehicle-to-vehicle and vehicle-to-pedestrian relationships. In this study, we propose a method for grasping traffic situations from videos taken by an in-vehicle camera, estimating the position and speed not only of cooperating vehicles but also of the surrounding vehicles. We first detect surrounding vehicles by image analysis with an existing DNN and then estimate detailed information for each detected vehicle: its distance from the own vehicle, relative speed, lane, and direction. To estimate distance, we use road signs whose sizes are known, and we estimate the relative distances to other vehicles by relating them to the road signs' lengths in the image. Detecting other vehicles with an in-vehicle camera and estimating the distances to them with the proposed method, we confirmed the following accuracy: minimum error 1.89%, maximum error 9.06%, and mean absolute error 7.34%.
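The idea of estimating distance from an object of known size can be illustrated with a simple pinhole-camera sketch. This is not the authors' implementation: the abstract does not give the exact formulas, so the pinhole model, the `Detection` type, and all function names and parameters below are assumptions made for illustration. The sketch calibrates a focal length in pixels from one reference object at a known distance, estimates distance from apparent height, derives a relative speed from two successive estimates, and computes a percentage error of the kind reported above.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """Hypothetical detection: apparent height in pixels of an object
    whose real-world height in metres is known (e.g. a road sign)."""
    pixel_height: float
    real_height_m: float


def estimate_focal_length_px(reference: Detection, known_distance_m: float) -> float:
    """Calibrate the focal length (in pixels) from one reference object
    observed at a known distance, assuming a pinhole camera."""
    if reference.real_height_m <= 0 or known_distance_m <= 0:
        raise ValueError("real height and distance must be positive")
    return reference.pixel_height * known_distance_m / reference.real_height_m


def estimate_distance_m(det: Detection, focal_length_px: float) -> float:
    """Pinhole model: distance = focal_length * real_height / pixel_height."""
    if det.pixel_height <= 0:
        raise ValueError("pixel height must be positive")
    return focal_length_px * det.real_height_m / det.pixel_height


def relative_speed_mps(prev_distance_m: float, curr_distance_m: float, dt_s: float) -> float:
    """Relative speed from two successive distance estimates.
    Negative values mean the other vehicle is getting closer."""
    if dt_s <= 0:
        raise ValueError("time step must be positive")
    return (curr_distance_m - prev_distance_m) / dt_s


def percent_error(estimated: float, actual: float) -> float:
    """Absolute error of an estimate as a percentage of the true value."""
    if actual == 0:
        raise ValueError("actual value must be non-zero")
    return abs(estimated - actual) / abs(actual) * 100.0


if __name__ == "__main__":
    # Illustrative numbers only; they are not taken from the paper.
    focal = estimate_focal_length_px(Detection(60.0, 0.6), known_distance_m=10.0)
    distance = estimate_distance_m(Detection(30.0, 0.6), focal)
    print(f"focal length: {focal:.1f} px, estimated distance: {distance:.1f} m")
    print(f"relative speed: {relative_speed_mps(20.0, 19.0, 0.5):.1f} m/s")
    print(f"error: {percent_error(21.0, 20.0):.2f} %")
```

A real pipeline would also need to account for lens distortion, camera pitch, and noise in the detector's bounding boxes, none of which this sketch models.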

Published Papers

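- 石崎雅大, 廣森聡仁, 山口弘純, & 東野輝夫. (2019). 走行時動画像を用いた周辺車両の位置推定手法 [A method for estimating the positions of surrounding vehicles using video captured while driving]. マルチメディア, 分散協調とモバイルシンポジウム 2019 論文集 [Proceedings of the Multimedia, Distributed, Cooperative, and Mobile Symposium 2019], 2019, 1688-1696.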