3D recognition technology has been attracting attention and developing rapidly in fields such as obstacle detection for automated driving and robotics. A typical sensor for 3D recognition is LiDAR (Light Detection and Ranging), which measures the time of flight (ToF) of light travelling to an object and back, and from it determines the distance to the object and its shape as a 3D point cloud.
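As a rough illustration of the ToF principle, the sketch below converts a round-trip time into a distance and then into a single 3D point. The function names, the beam-angle parameters, and the axis convention (x forward, y left, z up) are assumptions made for this example and do not describe any particular sensor's interface.

```python
import math

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_to_distance_m(round_trip_time_s: float) -> float:
    """Return the one-way distance for a measured round-trip time.

    The light pulse travels to the object and back, so the path
    length is halved.
    """
    if round_trip_time_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0


def to_point(distance_m: float, azimuth_rad: float, elevation_rad: float):
    """Convert a range and beam direction into an (x, y, z) point.

    Assumed convention: x forward, y left, z up.
    """
    horizontal = distance_m * math.cos(elevation_rad)
    x = horizontal * math.cos(azimuth_rad)
    y = horizontal * math.sin(azimuth_rad)
    z = distance_m * math.sin(elevation_rad)
    return (x, y, z)
```

Repeating this conversion for every beam direction in one scan yields the point cloud; a round trip of roughly 67 nanoseconds corresponds to an object about 10 m away.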

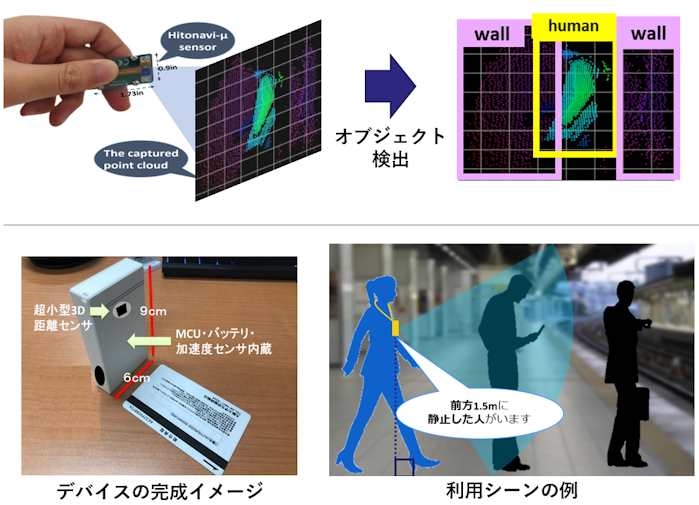

Initially, LiDAR was expensive and large, but advances in research and development have made it cheap and small enough to be integrated into smartphones. In this research, we are developing a method that runs on a wearable device incorporating a miniaturized LiDAR sensor and detects obstacles from the 3D point cloud it captures.

Devices equipped with this technique could have a significant impact on mobility assistance for the visually impaired. Currently, most visually impaired people rely on white canes, guide dogs, or sighted guides to get around. However, a white cane detects obstacles only within a limited range, which makes moving through urban areas stressful, and guide dogs and sighted guides are costly. We believe that this device will enable obstacle detection over a wider area than a white cane and, by analyzing the surrounding environment, will also enable voice notifications.

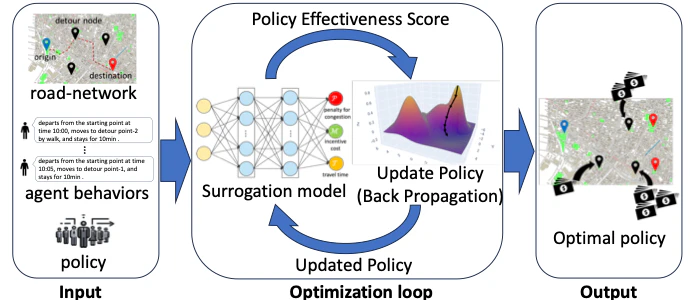

To achieve this, existing methods must be made faster, and noise data specific to wearable devices must be removed. Most existing object detection methods assume high-performance computers and are impractical to run on wearable devices, which have far less processing power. In addition, a wearable device shakes as the wearer moves, so some frames do not capture the surrounding environment in the direction of movement. Such noisy frames must be eliminated using the inertial sensors mounted on the device. We are currently working on these issues and hope to help realize a barrier-free society.
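One simple way to picture the inertial filtering step is to discard any point cloud frame captured while the device was rotating quickly. The sketch below is only an illustration under that assumption: the `Frame` structure, the `keep_stable_frames` helper, and the 1.0 rad/s threshold are hypothetical and are not taken from the published method.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float, float]


@dataclass
class Frame:
    """One LiDAR frame paired with the gyroscope reading at capture time."""
    points: List[Point]
    gyro_rad_s: Tuple[float, float, float]  # angular velocity (x, y, z)


def angular_speed(gyro_rad_s: Tuple[float, float, float]) -> float:
    """Magnitude of the angular velocity vector in rad/s."""
    return math.sqrt(sum(w * w for w in gyro_rad_s))


def keep_stable_frames(
    frames: List[Frame],
    max_angular_speed_rad_s: float = 1.0,  # hypothetical threshold
) -> List[Frame]:
    """Drop frames captured while the device was rotating too fast."""
    return [
        f for f in frames
        if angular_speed(f.gyro_rad_s) <= max_angular_speed_rad_s
    ]


frames = [
    Frame(points=[(1.0, 0.0, 0.0)], gyro_rad_s=(0.0, 0.0, 0.1)),  # steady
    Frame(points=[(0.0, 1.0, 0.0)], gyro_rad_s=(2.0, 0.0, 0.0)),  # shaking
]
stable = keep_stable_frames(frames)
```

A threshold on angular speed is cheap enough for a low-power processor, but a real system might instead compare the estimated device orientation with the direction of movement.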

Published Papers

- Rizk, H., Okochi, Y., & Yamaguchi, H. (2022, October). Demonstrating hitonavi-μ: a novel wearable LiDAR for human activity recognition. In Proceedings of the 28th Annual International Conference on Mobile Computing And Networking (MobiCom2022, CORE RANK A*) (pp. 756-757). Best Demo Award. https://dl.acm.org/doi/10.1145/3495243.3558744

- Okochi, Y., Rizk, H., Amano, T., & Yamaguchi, H. (2022, October). Object recognition from 3D point cloud on resource-constrained edge device. In 2022 18th International Conference on Wireless and Mobile Computing, Networking and Communications (WiMob) (pp. 369-374). IEEE. https://ieeexplore.ieee.org/document/9941552

- Okochi, Y., Rizk, H., & Yamaguchi, H. (2022, August). Lightweight real-time object detection using a portable 3D spatial sensing device (in Japanese). In IEICE Conferences Archives (FIT2022). The Institute of Electronics, Information and Communication Engineers. FIT Funai Best Paper Award. https://www.ieice.org/publications/conference-FIT-DVDs/FIT2022/data/html/program/pdf/CM-002.pdf

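- Okochi, Y., Rizk, H., & Yamaguchi, H. (2022). Design and development of a lightweight portable 3D spatial sensing device (in Japanese). In Proceedings of the Multimedia, Distributed, Cooperative, and Mobile Symposium (DICOMO) 2022 (pp. 171-178). Young Researcher Award. https://ipsj.ixsq.nii.ac.jp/records/219598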

- Okochi, Y., Rizk, H., & Yamaguchi, H. (2022). On-the-fly spatio-temporal human segmentation of 3D point cloud data by micro-size LiDAR. In 2022 18th International Conference on Intelligent Environments (IE). IEEE. https://ieeexplore.ieee.org/document/9826758