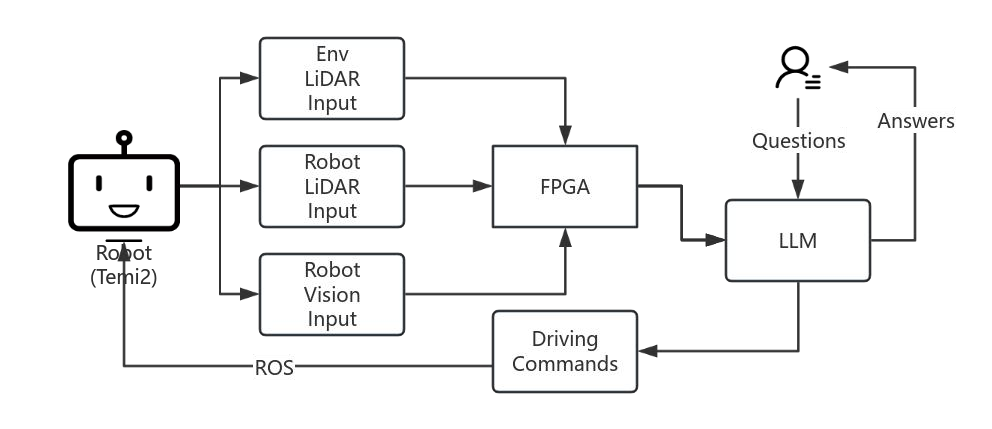

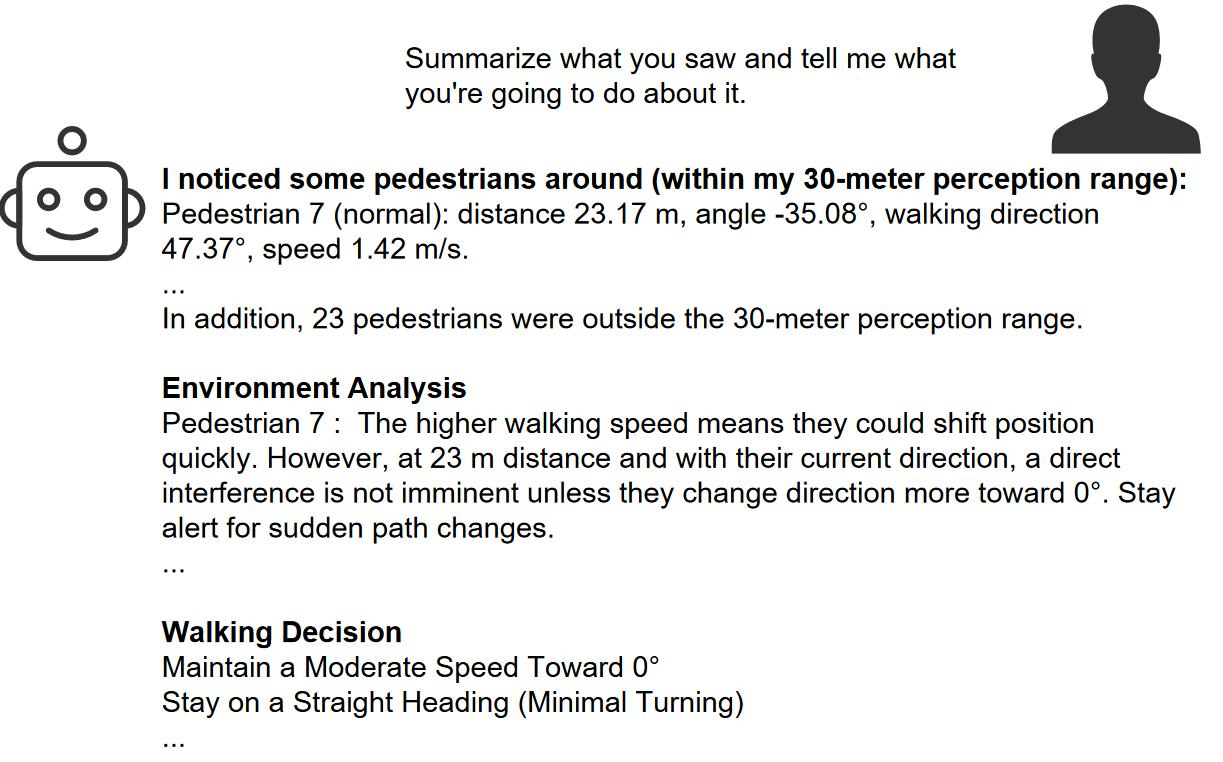

This research addresses autonomous robot navigation in dynamic, high-density environments such as train stations and shopping malls. We propose a framework that integrates multimodal sensor fusion (LiDAR and vision) with a Large Language Model (LLM). Rule-based methods struggle with unpredictable human behavior and dynamic obstacles, so our system combines FPGA-accelerated real-time data processing with LLM-driven, socially compliant path planning. Specifically, LiDAR point clouds and Triple-RGB camera data are fused on an FPGA, with detections from the two sensors associated using the Hungarian algorithm, while the LLM analyzes pedestrian attributes (e.g., age, wheelchair usage) to dynamically adjust navigation priorities. Experimental results show a 40% reduction in pedestrian prediction error compared to baseline models, with FPGA processing achieving sub-10 ms latency. Future work includes improving inference accuracy via Q-LoRA and verifying the FPGA module independently.
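To make the association step concrete, the following is a minimal software sketch of Hungarian-algorithm matching between LiDAR and camera detections. It is not the FPGA implementation described above. It assumes, purely for illustration, that both sensors yield 2D object centers in a common frame, that the cost is Euclidean distance, and that matches beyond a gating distance are rejected. The names `hungarian`, `associate`, and `max_dist` are hypothetical.

```python
import math


def hungarian(cost):
    """Minimum-cost assignment for an n x m cost matrix with n <= m.

    Returns a list where result[i] is the column assigned to row i.
    Standard O(n^2 * m) potentials-based Hungarian (Kuhn-Munkres) method.
    """
    n, m = len(cost), len(cost[0])
    u = [0.0] * (n + 1)    # row potentials (1-indexed)
    v = [0.0] * (m + 1)    # column potentials (1-indexed)
    p = [0] * (m + 1)      # p[j] = row matched to column j, 0 = none
    way = [0] * (m + 1)    # back-pointers along the augmenting path
    for i in range(1, n + 1):
        p[0] = i
        j0 = 0
        minv = [math.inf] * (m + 1)
        used = [False] * (m + 1)
        while True:
            used[j0] = True
            i0 = p[j0]
            delta, j1 = math.inf, 0
            for j in range(1, m + 1):
                if not used[j]:
                    cur = cost[i0 - 1][j - 1] - u[i0] - v[j]
                    if cur < minv[j]:
                        minv[j], way[j] = cur, j0
                    if minv[j] < delta:
                        delta, j1 = minv[j], j
            for j in range(m + 1):
                if used[j]:
                    u[p[j]] += delta
                    v[j] -= delta
                else:
                    minv[j] -= delta
            j0 = j1
            if p[j0] == 0:
                break
        # Flip matches along the augmenting path back to the virtual column 0.
        while j0 != 0:
            j1 = way[j0]
            p[j0] = p[j1]
            j0 = j1
    assignment = [-1] * n
    for j in range(1, m + 1):
        if p[j] != 0:
            assignment[p[j] - 1] = j - 1
    return assignment


def associate(lidar_xy, camera_xy, max_dist=1.0):
    """Pair LiDAR and camera detections; returns sorted (lidar_idx, camera_idx).

    Assumption: both inputs are lists of (x, y) centers in the same frame.
    Pairs farther apart than max_dist (a hypothetical gate) are dropped.
    """
    if not lidar_xy or not camera_xy:
        return []
    # hungarian() needs rows <= columns, so put the shorter list on the rows.
    swap = len(lidar_xy) > len(camera_xy)
    rows, cols = (camera_xy, lidar_xy) if swap else (lidar_xy, camera_xy)
    cost = [[math.dist(r, c) for c in cols] for r in rows]
    pairs = []
    for i, j in enumerate(hungarian(cost)):
        if cost[i][j] <= max_dist:
            pairs.append((j, i) if swap else (i, j))
    return sorted(pairs)


if __name__ == "__main__":
    lidar = [(0.0, 0.0), (5.0, 5.0), (10.0, 0.0)]
    camera = [(5.2, 4.9), (0.1, -0.1)]
    print(associate(lidar, camera))
```

In this example the third LiDAR detection has no camera counterpart and is left unmatched. Unlike greedy nearest-neighbor matching, the Hungarian algorithm minimizes the total association cost, which matters when pedestrians are close together and the nearest detection for one object is also the only plausible match for another.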

Autonomous Navigation; Multimodal Fusion; FPGA Acceleration; LLM (Large Language Model); Socially Compliant Path Planning; Dynamic Environment Adaptation