In autonomous driving, sensors play a critical role in perceiving the surrounding environment and ensuring safe operation. However, their performance can degrade in adverse weather or low-light conditions, which makes recognition accuracy unstable. This instability makes it difficult for autonomous vehicles to make reliable driving decisions. Accurately recognizing environmental conditions such as weather and time of day is therefore essential for autonomous vehicles to adapt their behavior appropriately.

Recent studies have shown that deep learning models such as Convolutional Neural Networks (CNNs) and Vision Transformers (ViTs) achieve high accuracy when classifying environmental conditions. However, like medicine, autonomous driving is a safety-critical domain in which human lives are at stake, so understanding the reasoning behind a model's decisions is essential for trust and reliability. Despite their high classification performance, deep learning models often lack interpretability: it is difficult to explain why they reach a given decision.

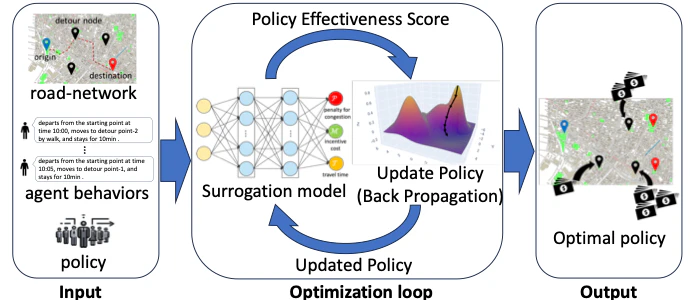

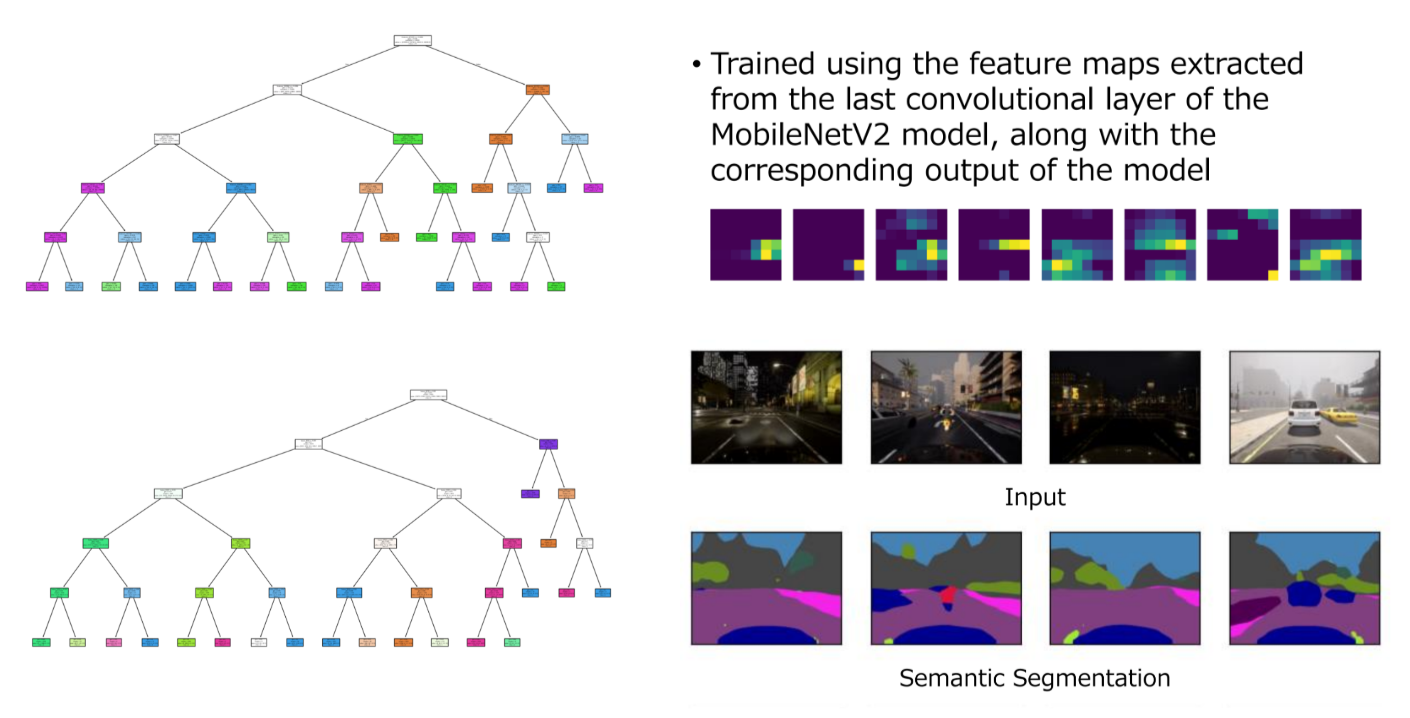

In this research, we therefore propose a method that takes video frames from an in-vehicle camera as input, retains the high classification performance of a deep learning model (a CNN), and improves interpretability by approximating that model with surrogate decision trees. With this approach, we aim to make the model's decision-making process more transparent and thereby contribute to the safety and reliability of autonomous driving systems.
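The surrogate idea can be illustrated with a minimal global-surrogate sketch. This section does not specify which features the trees use or how they are trained, so everything below is an assumption for illustration only: the CNN is replaced by a hypothetical stand-in `black_box_predict`, each frame is summarized by two hypothetical features (mean brightness and contrast), the labels `night`/`fog`/`clear` are invented, and the tree is a small CART-style tree with Gini impurity written in pure Python. The key point it shows is that the tree is fit to the black-box model's predicted labels rather than to ground truth, and that fidelity measures how often the two agree.

```python
import random
from collections import Counter


# HYPOTHETICAL stand-in for the trained CNN: maps a frame's features to a label.
# In the real pipeline this would be the CNN's predicted class for each frame.
def black_box_predict(x):
    brightness, contrast = x
    if brightness < 0.3:
        return "night"
    return "fog" if contrast < 0.4 else "clear"


def gini(labels):
    """Gini impurity of a list of class labels."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def best_split(X, y):
    """Return (weighted_gini, feature_index, threshold) of the best axis-aligned split, or None."""
    best = None
    for f in range(len(X[0])):
        for t in sorted({row[f] for row in X}):
            left = [yi for xi, yi in zip(X, y) if xi[f] <= t]
            right = [yi for xi, yi in zip(X, y) if xi[f] > t]
            if not left or not right:
                continue
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(y)
            if best is None or score < best[0]:
                best = (score, f, t)
    return best


def build_tree(X, y, depth=0, max_depth=3):
    """Grow a tree: internal nodes are (feature, threshold, left, right); leaves are labels."""
    majority = Counter(y).most_common(1)[0][0]
    if depth >= max_depth or len(set(y)) == 1:
        return majority
    split = best_split(X, y)
    if split is None:
        return majority
    _, f, t = split
    left = [(xi, yi) for xi, yi in zip(X, y) if xi[f] <= t]
    right = [(xi, yi) for xi, yi in zip(X, y) if xi[f] > t]
    return (f, t,
            build_tree([a for a, _ in left], [b for _, b in left], depth + 1, max_depth),
            build_tree([a for a, _ in right], [b for _, b in right], depth + 1, max_depth))


def predict(tree, x):
    """Follow the splits from the root until a leaf label is reached."""
    while isinstance(tree, tuple):
        f, t, left, right = tree
        tree = left if x[f] <= t else right
    return tree


def to_rules(tree, names, indent=""):
    """Render the tree as nested if/else rules, the human-readable explanation."""
    if not isinstance(tree, tuple):
        return [f"{indent}-> {tree}"]
    f, t, left, right = tree
    return ([f"{indent}if {names[f]} <= {t:.2f}:"] + to_rules(left, names, indent + "  ")
            + [f"{indent}else:"] + to_rules(right, names, indent + "  "))


random.seed(0)
# HYPOTHETICAL per-frame features: (mean brightness, contrast), both in [0, 1].
frames = [(random.random(), random.random()) for _ in range(200)]
# The surrogate is trained on the black box's outputs, not on ground-truth labels.
cnn_labels = [black_box_predict(x) for x in frames]
tree = build_tree(frames, cnn_labels, max_depth=3)
# Fidelity: fraction of frames on which the tree agrees with the black box.
fidelity = sum(predict(tree, x) == y for x, y in zip(frames, cnn_labels)) / len(frames)
print(f"fidelity = {fidelity:.2f}")
print("\n".join(to_rules(tree, ["brightness", "contrast"])))
```

A shallow `max_depth` keeps the printed rules short enough to read, at some cost in fidelity. This sketch measures fidelity on the same frames used to fit the tree; a more careful evaluation would report it on held-out frames.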

Keywords: Autonomous Driving, Environmental Condition Recognition, CNN, Surrogate Model, Decision Tree